James C. Scott

Two Cheers for Anarchism

Six Easy Pieces on Autonomy, Dignity, and Meaningful Work and Play

An Anarchist Squint, or Seeing Like an Anarchist

An Anarchist Squint at the Practice of Social Science

One: The Uses of Disorder and “Charisma”

Fragment 1: Scott’s Law of Anarchist Calisthenics

Fragment 2: On the Importance of Insubordination

Fragment 3: More on Insubordination

Fragment 4: Advertisement: “Leader looking for followers, willing to follow your lead”

Two: Vernacular Order, Official Order

Fragment 5: Vernacular and Official Ways of “Knowing”

Fragment 6: Official Knowledge and Landscapes of Control

Fragment 7: The Resilience of the Vernacular

Fragment 8: The Attractions of the Disorderly City

Fragment 9: The Chaos behind Neatness

Fragment 10: The Anarchist’s Sworn Enemy

Three: The Production of Human Beings

Fragment 11: Play and Openness

Fragment 12: It’s Ignorance, Stupid! Uncertainty and Adaptability

Fragment 13: GHP: The Gross Human Product

Fragment 14: A Caring Institution

Fragment 15: Pathologies of the Institutional Life

Fragment 16: A Modest, Counterintuitive Example: Red Light Removal

Four: Two Cheers for the Petty Bourgeoisie

Fragment 17: Introducing a Maligned Class

Fragment 18: The Etiology of Contempt

Fragment 19: Petty Bourgeois Dreams: The Lure of Property

Fragment 20: The Not So Petty Social Functions of the Petty Bourgeoisie

Fragment 21: “Free Lunches” Courtesy of the Petty Bourgeoisie

Fragment 22: Debate and Quality: Against Quantitative Measures of Qualities

Fragment 23: What If … ? An Audit Society Fantasy

Fragment 24: Invalid and Inevitably Corrupt

Fragment 25: Democracy, Merit, and the End of Politics

Fragment 26: In Defense of Politics

Fragment 27: Retail Goodness and Sympathy

Fragment 28: Bringing Particularity, Flux, and Contingency Back In

Preface

The arguments found here have been gestating for a long time, as I wrote about peasants, class conflict, resistance, development projects, and marginal peoples in the hills of Southeast Asia. Again and again over three decades, I found myself having said something in a seminar discussion or having written something and then catching myself thinking, “Now, that sounds like what an anarchist would argue.” In geometry, two points make a line; but when the third, fourth, and fifth points all fall on the same line, then the coincidence is hard to ignore. Struck by that coincidence, I decided it was time to read the anarchist classics and the histories of anarchist movements. To that end, I taught a large undergraduate lecture course on anarchism in an effort to educate myself and perhaps work out my relationship to anarchism. The result, having sat on the back burner for the better part of twenty years after the course ended, is assembled here.

My interest in the anarchist critique of the state was born of disillusionment and dashed hopes in revolutionary change. This was a common enough experience for those who came to political consciousness in the 1960s in North America. For me and many others, the 1960s were the high tide of what one might call a romance with peasant wars of national liberation. I was, for a time, fully swept up in this moment of utopian possibilities. I followed with some awe and, in retrospect, a great deal of naiveté the referendum for independence in Ahmed Sékou Touré’s Guinea, the pan-African initiatives of Ghana’s president, Kwame Nkrumah, the early elections in Indonesia, the independence and first elections in Burma, where I had spent a year, and, of course, the land reforms in revolutionary China and nationwide elections in India.

The disillusionment was propelled by two processes: historical inquiry and current events. It dawned on me, as it should have earlier, that virtually every major successful revolution ended by creating a state more powerful than the one it overthrew, a state that in turn was able to extract more resources from and exercise more control over the very populations it was designed to serve. Here, the anarchist critique of Marx and, especially, of Lenin seemed prescient. The French Revolution led to the Thermidorian Reaction, and then to the precocious and belligerent Napoleonic state. The October Revolution in Russia led to Lenin’s dictatorship of the vanguard party and then to the repression of striking seamen and workers (the proletariat!) at Kronstadt, collectivization, and the gulag. If the ancien régime had presided over feudal inequality with brutality, the record of the revolutions made for similarly melancholy reading. The popular aspirations that provided the energy and courage for the revolutionary victory were, in any long view, almost inevitably betrayed.

Current events were no less disquieting when it came to what contemporary revolutions meant for the largest class in world history, the peasantry. The Viet Minh, rulers in the northern half of Vietnam following the Geneva Accords of 1954, had ruthlessly suppressed a popular rebellion of smallholders and petty landlords in the very areas that were the historical hotbeds of peasant radicalism. In China, it had become clear that the Great Leap Forward, during which Mao, his critics silenced, forced millions of peasants into large agrarian communes and dining halls, was having catastrophic results. Scholars and statisticians still argue about the human toll between 1958 and 1962, but it is unlikely to be less than 35 million people. While the human toll of the Great Leap Forward was being recognized, ominous news of starvation and executions in Kampuchea under the Khmer Rouge completed the picture of peasant revolutions gone lethally awry.

It was not as if the Western bloc and its Cold War policies in poor nations offered an edifying alternative to “real existing socialism.” Regimes and states that presided dictatorially over crushing inequalities were welcomed as allies in the struggle against communism. Those familiar with this period will recall that it also represented the early high tide of development studies and the new field of development economics. If revolutionary elites imagined vast projects of social engineering in a collectivist vein, development specialists were no less certain of their ability to deliver economic growth by hierarchically engineering property forms, investing in physical infrastructure, and promoting cash-cropping and markets for land, generally strengthening the state and amplifying inequalities. The “free world,” especially in the Global South, seemed vulnerable to both the socialist critique of capitalist inequality and the communist and anarchist critiques of the state as the guarantor of these inequalities.

This twin disillusionment seemed to me to bear out the adage of Mikhail Bakunin: “Freedom without socialism is privilege and injustice; socialism without freedom is slavery and brutality.”

An Anarchist Squint, or Seeing Like an Anarchist

Lacking a comprehensive anarchist worldview and philosophy, and in any case wary of nomothetic ways of seeing, I am making a case for a sort of anarchist squint. What I aim to show is that if you put on anarchist glasses and look at the history of popular movements, revolutions, ordinary politics, and the state from that angle, certain insights will appear that are obscured from almost any other angle. It will also become apparent that anarchist principles are active in the aspirations and political action of people who have never heard of anarchism or anarchist philosophy. One thing that heaves into view, I believe, is what Pierre-Joseph Proudhon had in mind when he first used the term “anarchism,” namely, mutuality, or cooperation without hierarchy or state rule. Another is the anarchist tolerance for confusion and improvisation that accompanies social learning, and confidence in spontaneous cooperation and reciprocity. Here Rosa Luxemburg’s preference, in the long run, for the honest mistakes of the working class over the wisdom of the executive decisions of a handful of vanguard party elites is indicative of this stance. My claim, then, is fairly modest. These glasses, I think, offer a sharper image and better depth of field than most of the alternatives.

Since I am proposing a “process-oriented” anarchist view, or what might be termed anarchism as praxis, the reader might reasonably ask, given the many varieties of anarchism available, which particular glasses I propose to wear.

My anarchist squint involves a defense of politics, conflict, and debate, and the perpetual uncertainty and learning they entail. This means that I reject the major stream of utopian scientism that dominated much of anarchist thought around the turn of the twentieth century. In light of the huge strides in industry, chemistry, medicine, engineering, and transportation, it was no wonder that high modernist optimism on the right and the left led to the belief that the problem of scarcity had, in principle, been solved. Scientific progress, many believed, had uncovered the laws of nature, and with them the means to solve the problems of subsistence, social organization, and institutional design on a scientific basis. As men became more rational and knowledgeable, science would tell us how we should live, and politics would no longer be necessary. Figures as disparate as the comte de Saint-Simon, J. S. Mill, Marx, and Lenin were inclined to see a coming world in which enlightened specialists would govern according to scientific principles and “the administration of things” would replace politics. Lenin saw in the remarkable total mobilization of the German economy in World War I a vision of the smoothly humming machine of the socialist future; one had only to replace the German militarists at the helm of state with the vanguard party of the proletariat, and administration would make politics beside the point. For many anarchists the same vision of progress pointed the way toward an economy in which the state was beside the point. We have since learned not only that material plenty, far from banishing politics, creates new spheres of political struggle, but also that statist socialism was less “the administration of things” than the trade union of the ruling class protecting its privileges.

Unlike many anarchist thinkers, I do not believe that the state is everywhere and always the enemy of freedom. Americans need only recall the scene of the federalized National Guard leading black children to school through a menacing crowd of angry whites in Little Rock, Arkansas, in 1957 to realize that the state can, in some circumstances, play an emancipatory role. I believe that even this possibility has arisen only as a result of the establishment of democratic citizenship and popular suffrage by the French Revolution, subsequently extended to women, domestics, and minorities. That means that of the roughly five-thousand-year history of states, only in the last two centuries or so has even the possibility arisen that states might occasionally enlarge the realm of human freedom. The conditions under which such possibilities are occasionally realized, I believe, occur only when massive extra-institutional disruption from below threatens the whole political edifice. Even this achievement is fraught with melancholy, inasmuch as the French Revolution also marked the moment when the state won direct, unmediated access to the citizen and when universal conscription and total warfare became possible as well.

Nor do I believe that the state is the only institution that endangers freedom. To assert so would be to ignore a long and deep history of pre-state slavery, property in women, warfare, and bondage. It is one thing to disagree utterly with Hobbes about the nature of society before the existence of the state (nasty, brutish, and short) and another to believe that “the state of nature” was an unbroken landscape of communal property, cooperation, and peace.

The last strand of anarchist thought I definitely wish to distance myself from is the sort of libertarianism that tolerates (or even encourages) great differences in wealth, property, and status. Freedom and (small “d”) democracy are, in conditions of rampant inequality, a cruel sham, as Bakunin understood. There is no authentic freedom where huge differences make voluntary agreements or exchanges nothing more than legalized plunder. Consider, for example, the case of interwar China, when famine and war made starvation common. Many women faced the stark choice of either starving or selling their children and living. For a market fundamentalist, selling a child is, after all, a voluntary choice, and therefore an act of freedom, the terms of which are valid (pacta sunt servanda). The logic, of course, is monstrous. It is the coercive structure of the situation in this case that impels people into such catastrophic choices.

I have chosen a morally loaded example, but one not all that uncommon today. The international trade in body parts and infants is a case in point. Picture a time-lapse photograph of the globe tracing the worldwide movement of kidneys, corneas, hearts, bone marrow, lungs, and babies. They all move inexorably from the poorest nations of the globe, and from the poorest classes within them, largely to the rich nations of the North Atlantic and the most privileged within them. Jonathan Swift’s “Modest Proposal” was not far off the mark. Can anyone doubt that this trade in precious goods is an artifact of a huge and essentially coercive imbalance of life chances in the world, what some have called, entirely appropriately, in my view, “structural violence”?

The point is simply that huge disparities in wealth, property, and status make a mockery of freedom. The consolidation of wealth and power over the past forty years in the United States, mimicked more recently in many states in the Global South following neoliberal policies, has created a situation that the anarchists foresaw. Cumulative inequalities in access to political influence via sheer economic muscle, huge (statelike) oligopolies, media control, campaign contributions, the shaping of legislation (right down to designated loopholes), redistricting, access to legal knowledge, and the like have allowed elections and legislation to serve largely to amplify existing inequalities. It is hard to see any plausible way in which such self-reinforcing inequalities could be reduced through existing institutions, in particular since even the recent and severe capitalist crisis beginning in 2008 failed to produce anything like Roosevelt’s New Deal. Democratic institutions have, to a great extent, become commodities themselves, offered up for auction to the highest bidder.

The market measures influence in dollars, while a democracy, in principle, measures votes. In practice, at some level of inequality, the dollars infect and overwhelm the votes. Reasonable people can disagree about the levels of inequality that a democracy can tolerate without becoming an utter charade. My judgment is that we have been in the “charade zone” for quite some time. What is clear to anyone except a market fundamentalist (of the sort who would ethically condone a citizen’s selling himself—voluntarily, of course—as a chattel slave) is that democracy is a cruel hoax without relative equality. This, of course, is the great dilemma for an anarchist. If relative equality is a necessary condition of mutuality and freedom, how can it be guaranteed except through the state? Facing this conundrum, I believe that both theoretically and practically, the abolition of the state is not an option. We are stuck, alas, with Leviathan, though not at all for the reasons Hobbes had supposed, and the challenge is to tame it. That challenge may well be beyond our reach.

The Paradox of Organization

Much of what anarchism has to teach us concerns how political change, both reformist and revolutionary, actually happens, how we should understand what is “political,” and finally how we ought to go about studying politics.

Organizations, contrary to the usual view, do not generally precipitate protest movements. In fact, it is more nearly correct to say that protest movements precipitate organizations, which in turn usually attempt to tame protest and turn it into institutional channels. So far as system-threatening protests are concerned, formal organizations are more an impediment than a facilitator. It is a great paradox of democratic change, though not so surprising from behind an anarchist squint, that the very institutions designed to avoid popular tumults and make peaceful, orderly legislative change possible have generally failed to deliver. This is in large part because existing state institutions are both sclerotic and at the service of dominant interests, as are the vast majority of formal organizations that represent established interests. The latter have a chokehold on state power and institutionalized access to it.

Episodes of structural change, therefore, tend to occur only when massive, noninstitutionalized disruption in the form of riots, attacks on property, unruly demonstrations, theft, arson, and open defiance threatens established institutions. Such disruption is virtually never encouraged, let alone initiated, even by left-wing organizations that are structurally inclined to favor orderly demands, demonstrations, and strikes that can usually be contained within the existing institutional framework. Opposition institutions with names, office bearers, constitutions, banners, and their own internal governmental routines favor, naturally enough, institutionalized conflict, at which they are specialists.[1]

As Frances Fox Piven and Richard A. Cloward have convincingly shown for protests by the unemployed and by workers during the Great Depression in the United States, as well as for the civil rights movement, the anti–Vietnam War movement, and the welfare rights movement, what success these movements enjoyed came when they were at their most disruptive, most confrontational, least organized, and least hierarchical.[2] It was the effort to stem the contagion of a spreading, noninstitutionalized challenge to the existing order that prompted concessions. There were no leaders to negotiate a deal with, no one who could promise to get people off the streets in return for concessions. Mass defiance, precisely because it threatens the institutional order, gives rise to organizations that try to channel that defiance into the flow of normal politics, where it can be contained. In such circumstances, elites turn to organizations they would normally disdain, an example being Premier Georges Pompidou’s deal with the French Communist Party (an established “player”) promising huge wage concessions in 1968 in order to split the party loyalists off from students and wildcat strikers.

Disruption comes in many wondrous forms, and it seems useful to distinguish them by how articulate they are and whether or not they lay claim to the moral high ground of democratic politics. Thus, disruption aimed at realizing or expanding democratic freedoms (such as abolition, women’s suffrage, or desegregation) articulates a specific claim to occupy the high ground of democratic rights. What about massive disruptions aimed at achieving the eight-hour workday or the withdrawal of troops from Vietnam, or, more nebulously, at opposing neoliberal globalization? Here the objective is still reasonably articulated, but the claim to the moral high ground is more sharply contested. Though one may deplore the strategy of the “black bloc” during the “Battle in Seattle” around the World Trade Organization meeting in 1999, smashing storefronts and skirmishing with the police, there is little doubt that without the media attention their quasi-calculated rampage drew, the wider antiglobalization, anti-WTO, anti–International Monetary Fund, anti–World Bank movement would have gone largely unnoticed.

The hardest case, but one increasingly common among marginalized communities, is the generalized riot, often with looting, that is more an inchoate cry of anger and alienation than a coherent demand or claim. Precisely because it is so inarticulate and arises among the least organized sectors of society, it appears more menacing; there is no particular demand to address, nor are there any obvious leaders with whom to negotiate. Governing elites confront a spectrum of options. In the urban riots in Britain in the late summer of 2011, the Tory government’s first response was repression and summary justice. Another political response, urged by Labour figures, was a mixture of urban social reform, economic amelioration, and selective punishment. What the riots undeniably did, however, was get the attention of elites, without which most of the issues underlying the riots would not have been raised to public consciousness, no matter how they were disposed of.

Here again there is a dilemma. Massive disruption and defiance can, under some conditions, lead directly to authoritarianism or fascism rather than reform or revolution. That is always the danger, but it is nonetheless true that extra-institutional protest seems a necessary, though not sufficient, condition for major progressive structural change such as the New Deal or civil rights.

Just as much of the politics that has historically mattered has taken the form of unruly defiance, it is also the case that for subordinate classes, for most of their history, politics has taken a very different extra-institutional form. For the peasantry and much of the early working class historically, we may look in vain for formal organizations and public manifestations. There is a whole realm of what I have called “infrapolitics” because it is practiced outside the visible spectrum of what usually passes for political activity. The state has historically thwarted lower-class organization, let alone public defiance. For subordinate groups, such politics is dangerous. They have, by and large, understood, as have guerrillas, that divisibility, small numbers, and dispersion help them avoid reprisal.

By infrapolitics I have in mind such acts as foot-dragging, poaching, pilfering, dissimulation, sabotage, desertion, absenteeism, squatting, and flight. Why risk getting shot for a failed mutiny when desertion will do just as well? Why risk an open land invasion when squatting will secure de facto land rights? Why openly petition for rights to wood, fish, and game when poaching will accomplish the same purpose quietly? In many cases these forms of de facto self-help flourish and are sustained by deeply held collective opinions about conscription, unjust wars, and rights to land and nature that cannot safely be ventured openly. And yet the accumulation of thousands or even millions of such petty acts can have massive effects on warfare, land rights, taxes, and property relations. The large-mesh net political scientists and most historians use to trawl for political activity utterly misses the fact that most subordinate classes have historically not had the luxury of open political organization. That has not prevented them from working microscopically, cooperatively, complicitly, and massively at political change from below. As Milovan Djilas noted long ago,

The slow, unproductive work of disinterested millions, together with the prevention of all work not considered “socialist”, is the incalculable, invisible, and gigantic waste which no communist regime has been able to avoid.[3]

Who can say precisely what role such expressions of disaffection (as captured in the popular slogan, “We pretend to work and they pretend to pay us”) played in the long-run viability of Soviet bloc economies?

Forms of informal cooperation, coordination, and action that embody mutuality without hierarchy are the quotidian experience of most people. Only occasionally do they embody implicit or explicit opposition to state law and institutions. Most villages and neighborhoods function precisely because of the informal, transient networks of coordination that do not require formal organization, let alone hierarchy. In other words, the experience of anarchistic mutuality is ubiquitous. As Colin Ward notes, “far from being a speculative vision of a future society, it is a description of a mode of human experience of everyday life, which operates side-by-side with, and in spite of, the dominant authoritarian trends of our society.”[4]

The big question, and one to which I do not have a definitive answer, is whether the existence, power, and reach of the state over the past several centuries have sapped the independent, self-organizing power of individuals and small communities. So many functions that were once accomplished by mutuality among equals and informal coordination are now state organized or state supervised. As Proudhon, anticipating Foucault, famously put it,

To be ruled is to be kept an eye on, inspected, spied on, regulated, indoctrinated, sermonized, listed and checked off, estimated, appraised, censured, ordered about by creatures without knowledge and without virtues. To be ruled is at every operation, transaction, movement, to be noted, registered, counted, priced, admonished, prevented, reformed, redressed, corrected.[5]

To what extent has the hegemony of the state and of formal, hierarchical organizations undermined the capacity for and the practice of mutuality and cooperation that have historically created order without the state? To what degree have the growing reach of the state and the assumptions behind action in a liberal economy actually produced the asocial egoists that Hobbes thought Leviathan was designed to tame? One could argue that the formal order of the liberal state depends fundamentally on a social capital of habits of mutuality and cooperation that antedate it, which it cannot create and which, in fact, it undermines. The state, arguably, destroys the natural initiative and responsibility that arise from voluntary cooperation. Further, the neoliberal celebration of the individual maximizer over society, of individual freehold property over common property, of the treatment of land (nature) and labor (human work life) as market commodities, and of monetary commensuration in, say, cost-benefit analysis (e.g., shadow pricing for the value of a sunset or an endangered view) all encourage habits of social calculation that smack of social Darwinism.

I am suggesting that two centuries of a strong state and liberal economies may have socialized us so that we have largely lost the habits of mutuality and are in danger now of becoming precisely the dangerous predators that Hobbes thought populated the state of nature. Leviathan may have given birth to its own justification.

An Anarchist Squint at the Practice of Social Science

The populist tendency of anarchist thought, with its belief in the possibilities of autonomy, self-organization, and cooperation, recognized, among other things, that peasants, artisans, and workers were themselves political thinkers. They had their own purposes, values, and practices, which any political system ignored at its peril. That basic respect for the agency of nonelites seems to have been betrayed not only by states but also by the practice of social science. It is common to ascribe to elites particular values, a sense of history, aesthetic tastes, even rudiments of a political philosophy. The political analysis of nonelites, by contrast, is often conducted, as it were, behind their backs. Their “politics” is read off their statistical profile: from such “facts” as their income, occupation, years of schooling, property holding, residence, race, ethnicity, and religion.

This is a practice that most social scientists would never judge remotely adequate to the study of elites. It is curiously akin both to state routines and to left-wing authoritarianism in treating the nonelite public and “masses” as ciphers of their socioeconomic characteristics, most of whose needs and worldview can be understood as a vector sum of incoming calories, cash, work routines, consumption patterns, and past voting behavior. It is not that such factors are not germane. What is inadmissible, both morally and scientifically, is the hubris that pretends to understand the behavior of human agents without for a moment listening systematically to how they understand what they are doing and how they explain themselves. Again, this is not to say that such self-explanations are transparent or free of strategic omissions and ulterior motives; they are simply no more transparent than the self-explanations of elites.

The job of social science, as I see it, is to provide, provisionally, the best explanation of behavior on the basis of all the evidence available, including especially the explanations of the purposive, deliberating agents whose behavior is being scrutinized. The notion that the agent’s view of the situation is irrelevant to this explanation is preposterous. Valid knowledge of the agent’s situation is simply inconceivable without it. No one has put the case better for the phenomenology of human action than John Dunn:

If we wish to understand other people and propose to claim that we have in fact done so, it is both imprudent and rude not to attend to what they say…. What we cannot properly do is to claim to know that we understand him [an agent] or his action better than he does himself without access to the best descriptions which he is able to offer.[6]

Anything else amounts to committing a social science crime behind the backs of history’s actors.

A Caution or Two

The use of the term “fragments” within the chapters is intended to alert the reader to what not to expect. “Fragments” is meant here in a sense more akin to “fragmentary.” These fragments of text are not like the shards of a once-intact pot that has been thrown to the ground or the pieces of a jigsaw puzzle that, when reassembled, will restore the pot or tableau to its original, whole condition. I do not, alas, have an elaborately worked-out argument for anarchism that would amount to an internally consistent political philosophy starting from first principles that might be compared, say, with that of Prince Kropotkin or Isaiah Berlin, let alone John Locke or Karl Marx. If the test for calling myself an anarchist thinker is having that level of ideological rigor, then I would surely fail it. What I do have and offer here is a series of aperçus that seem to me to add up to an endorsement of much that anarchist thinkers have had to say about the state, about revolution, and about equality.

Neither is this book an examination of anarchist thinkers or anarchist movements, however enlightening that might potentially be. Thus the reader will not find a detailed examination of, say, Proudhon, Bakunin, Malatesta, Sismondi, Tolstoy, Rocker, Tocqueville, or Landauer, though I have consulted the writings of most theorists of anarchy. Nor, again, will the reader find an account of anarchist or quasi-anarchist movements: of, say, Solidarnosc in Poland, the anarchists of Civil War Spain, or the anarchist workers of Argentina, Italy, or France, though I have read as widely about “real existing anarchism” as about its major theorists.

“Fragments” has a second sense as well. It represents, for me at any rate, something of an experiment in style and presentation. My two previous books (Seeing Like a State and The Art of Not Being Governed) were constructed more or less like elaborate and heavy siege engines in some Monty Python send-up of medieval warfare. I worked from outlines and diagrams on many sixteen-foot rolls of paper with thousands of minute notations to references. When I happened to mention to Alan Macfarlane that I was unhappy with my ponderous writing habits, he put me on to the techniques of essayist Lafcadio Hearn and a more intuitive, free form of composition that begins like a conversation, starting with the most arresting or gripping kernel of an argument and then elaborating, more or less organically, on that kernel. I have tried, with far fewer ritual bows to social science formulas than is customary, even for my idiosyncratic style, to follow his advice in the hope that it would prove more reader-friendly, surely something to aim for in a book with an anarchist bent.

One: The Uses of Disorder and “Charisma”

Fragment 1: Scott’s Law of Anarchist Calisthenics

I invented this law in Neubrandenburg, Germany, in the late summer of 1990.

In an effort to improve my barely existing German-language skills before spending a year in Berlin as a guest of the Wissenschaftskolleg, I hit on the idea of finding work on a farm rather than attending daily classes with pimply teenagers at a Goethe Institut center. Since the Wall had come down only a year earlier, I wondered whether I might be able to find a six-week summer job on a collective farm (landwirtschaftliche Produktionsgenossenschaft, or LPG), recently styled “cooperative,” in eastern Germany. A friend at the Wissenschaftskolleg had, it turned out, a close relative whose brother-in-law was the head of a collective farm in the tiny village of Pletz. Though wary, the brother-in-law was willing to provide room and board in return for work and a handsome weekly rent.

As a plan for improving my German by the sink-or-swim method, it was perfect; as a plan for a pleasant and edifying farm visit, it was a nightmare. The villagers and, above all, my host were suspicious of my aims. Was I aiming to pore over the accounts of the collective farm and uncover “irregularities”? Was I an advance party for Dutch farmers, who were scouting the area for land to rent in the aftermath of the socialist bloc’s collapse?

The collective farm at Pletz was a spectacular example of that collapse. Its specialization was growing “starch potatoes.” They were no good for pommes frites, though pigs might eat them in a pinch; their intended use, when refined, was to provide the starch base for Eastern European cosmetics. Never had a market flatlined as quickly as the market for socialist bloc cosmetics the day after the Wall was breached. Mountain after mountain of starch potatoes lay rotting beside the rail sidings in the summer sun.

Besides wondering whether utter penury lay ahead for them and what role I might have in it, for my hosts there was the more immediate question of my frail comprehension of German and the danger it posed for their small farm. Would I let the pigs out the wrong gate and into a neighbor’s field? Would I give the geese the feed intended for the bulls? Would I remember always to lock the door when I was working in the barn in case the Gypsies came? I had, it is true, given them more than ample cause for alarm in the first week, and they had taken to shouting at me in the vain hope we all seem to have that yelling will somehow overcome any language barrier. They managed to maintain a veneer of politeness, but the glances they exchanged at supper told me their patience was wearing thin. The aura of suspicion under which I labored, not to mention my manifest incompetence and incomprehension, was in turn getting on my nerves.

I decided, for my sanity as well as for theirs, to spend one day a week in the nearby town of Neubrandenburg. Getting there was not simple. The train didn’t stop at Pletz unless you put up a flag along the tracks to indicate that a passenger was waiting and, on the way back, told the conductor that you wanted to get off at Pletz, in which case he would stop specially in the middle of the fields to let you out. Once in the town I wandered the streets, frequented cafes and bars, pretended to read German newspapers (surreptitiously consulting my little dictionary), and tried not to stick out.

The once-a-day train back from Neubrandenburg that could be made to stop at Pletz left at around ten at night. Lest I miss it and have to spend the night as a vagrant in this strange city, I made sure I was at the station at least half an hour early. Every week for six or seven weeks the same intriguing scene was played out in front of the railroad station, giving me ample time to ponder it both as observer and as participant. The idea of “anarchist calisthenics” was conceived in the course of what an anthropologist would call my participant observation.

Outside the station was a major, for Neubrandenburg at any rate, intersection. During the day there was a fairly brisk traffic of pedestrians, cars, and trucks, and a set of traffic lights to regulate it. Later in the evening, however, the vehicle traffic virtually ceased while the pedestrian traffic, if anything, swelled to take advantage of the cooler evening breeze. Regularly between 9:00 and 10:00 p.m. there would be fifty or sixty pedestrians, not a few of them tipsy, who would cross the intersection. The lights were timed, I suppose, for vehicle traffic at midday and not adjusted for the heavy evening foot traffic. Again and again, fifty or sixty people waited patiently at the corner for the light to change in their favor: four minutes, five minutes, perhaps longer. It seemed an eternity. The landscape of Neubrandenburg, on the Mecklenburg Plain, is flat as a pancake. Peering in each direction from the intersection, then, one could see a mile or so of roadway, with, typically, no traffic at all. Very occasionally a single, small Trabant made its slow, smoky way to the intersection.

Twice, perhaps, in the course of roughly five hours of my observing this scene did a pedestrian cross against the light, and then always to a chorus of scolding tongues and fingers wagging in disapproval. I too became part of the scene. If I had mangled my last exchange in German, sapping my confidence, I stood there with the rest for as long as it took for the light to change, afraid to brave the glares that awaited me if I crossed. If, more rarely, my last exchange in German had gone well and my confidence was high, I would cross against the light, thinking, to buck up my courage, that it was stupid to obey a minor law that, in this case, was so contrary to reason.

It surprised me how much I had to screw up my courage merely to cross a street against general disapproval. How little my rational convictions seemed to weigh against the pressure of their scolding. Striding out boldly into the intersection with apparent conviction made a more striking impression, perhaps, but it required more courage than I could normally muster.

As a way of justifying my conduct to myself, I began to rehearse a little discourse that I imagined delivering in perfect German. It went something like this. “You know, you and especially your grandparents could have used more of a spirit of lawbreaking. One day you will be called on to break a big law in the name of justice and rationality. Everything will depend on it. You have to be ready. How are you going to prepare for that day when it really matters? You have to stay ‘in shape’ so that when the big day comes you will be ready. What you need is ‘anarchist calisthenics.’ Every day or so break some trivial law that makes no sense, even if it’s only jaywalking. Use your own head to judge whether a law is just or reasonable. That way, you’ll keep trim; and when the big day comes, you’ll be ready.”

Judging when it makes sense to break a law requires careful thought, even in the relatively innocuous case of jaywalking. I was reminded of this when I visited a retired Dutch scholar whose work I had long admired. When I went to see him, he was an avowed Maoist and defender of the Cultural Revolution, and something of an incendiary in Dutch academic politics. He invited me to lunch at a Chinese restaurant near his apartment in the small town of Wageningen. We came to an intersection, and the light was against us. Now, Wageningen, like Neubrandenburg, is perfectly flat, and one can see for miles in all directions. There was absolutely nothing coming. Without thinking, I stepped into the street, and as I did so, Dr. Wertheim said, “James, you must wait.” I protested weakly while regaining the curb, “But Dr. Wertheim, nothing is coming.” “James,” he replied instantly, “It would be a bad example for the children.” I was both chastened and instructed. Here was a Maoist incendiary with, nevertheless, a fine-tuned, dare I say Dutch, sense of civic responsibility, while I was the Yankee cowboy heedless of the effects of my act on my fellow citizens. Now when I jaywalk I look around to see that there are no children who might be endangered by my bad example.

Toward the very end of my farm stay in Neubrandenburg, there was a more public event that raised the issue of lawbreaking in a more striking way. A little item in the local newspaper informed me that anarchists from West Germany (the country was still nearly a month from formal reunification, or Einheit) had been hauling a huge papier-mâché statue from city square to city square in East Germany on the back of a flatbed truck. It depicted the silhouette of a running man carved into a block of granite. It was called Monument to the Unknown Deserters of Both World Wars (Denkmal an die unbekannten Deserteure der beiden Weltkriege) and bore the legend, “This is for the man who refused to kill his fellow man.”

It struck me as a magnificent anarchist gesture, this contrarian play on the well-nigh universal theme of the Unknown Soldier: the obscure, “every-infantryman” who fell honorably in battle for his nation’s objectives. Even in Germany, even in very recently ex–East Germany (celebrated as “The First Socialist State on German Soil”), this gesture was, however, distinctly unwelcome. For no matter how thoroughly progressive Germans may have repudiated the aims of Nazi Germany, they still bore an ungrudging admiration for the loyalty and sacrifice of its devoted soldiers. The Good Soldier Švejk, the Czech antihero who would rather have his sausage and beer near a warm fire than fight for his country, may have been a model of popular resistance to war for Bertolt Brecht, but for the city fathers of East Germany’s twilight year, this papier-mâché mockery was no laughing matter. It came to rest in each town square only so long as it took for the authorities to assemble and banish it. Thus began a merry chase: from Magdeburg to Potsdam to East Berlin to Bitterfeld to Halle to Leipzig to Weimar to Karl-Marx-Stadt (Chemnitz) to Neubrandenburg to Rostock, ending finally back in the then federal capital, Bonn. The city-to-city scamper and the inevitable publicity it provoked may have been precisely what its originators had in mind.

The stunt, aided by the heady atmosphere in the two years following the breach in the Berlin Wall, was contagious. Soon, progressives and anarchists throughout Germany had created dozens of their own municipal monuments to desertion. It was no small thing that an act traditionally associated with cowards and traitors was suddenly held up as honorable and perhaps even worthy of emulation. Small wonder that Germany, which surely has paid a very high price for patriotism in the service of inhuman objectives, would have been among the first to question publicly the value of obedience and to place monuments to deserters in public squares otherwise consecrated to Martin Luther, Frederick the Great, Bismarck, Goethe, and Schiller.

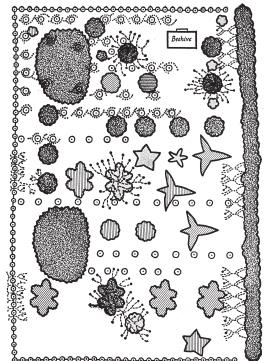

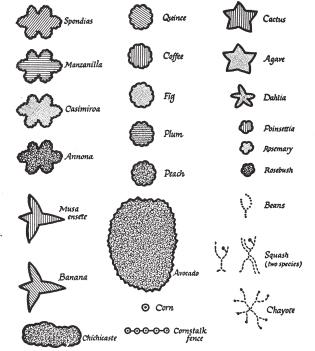

A monument to desertion poses something of a conceptual and aesthetic challenge. A few of the monuments erected to deserters throughout Germany were of lasting artistic value, and one, by Hannah Stuetz Menzel, at Ulm, at least managed to suggest the contagion that such high-stakes acts of disobedience can potentially inspire (fig. 1.1).

Figure 1.1. Memorial for the Unknown Deserter, by Mehmet Aksoy, Potsdam. Photograph courtesy of Volker Moerbitz, Monterey Institute of International Studies

Fragment 2: On the Importance of Insubordination

Acts of disobedience are of interest to us when they are exemplary, and especially when, as examples, they set off a chain reaction, prompting others to emulate them. Then we are in the presence less of an individual act of cowardice or conscience—perhaps both—than of a social phenomenon that can have massive political effects. Multiplied many thousandfold, such petty acts of refusal may, in the end, make an utter shambles of the plans dreamed up by generals and heads of state. Such petty acts of insubordination typically make no headlines. But just as millions of anthozoan polyps create, willy-nilly, a coral reef, so do thousands upon thousands of acts of insubordination and evasion create an economic or political barrier reef of their own. A double conspiracy of silence shrouds these acts in anonymity. The perpetrators rarely seek to call attention to themselves; their safety lies in their invisibility. The officials, for their part, are reluctant to call attention to rising levels of disobedience; to do so would risk encouraging others and calling attention to their fragile moral sway. The result is an oddly complicitous silence that all but expunges such forms of insubordination from the historical record.

And yet, such acts of what I have elsewhere called “everyday forms of resistance” have had enormous, often decisive, effects on the regimes, states, and armies at which they are implicitly directed. The defeat of the Confederate states in America’s great Civil War can almost certainly be attributed to a vast aggregation of acts of desertion and insubordination. In the fall of 1862, little more than a year after the war began, there were widespread crop failures in the South. Soldiers, particularly those from the non-slave-holding backcountry, were getting letters from famished families urging them to return home. Many thousands did, often as whole units, taking their arms with them. Having returned to the hills, most of them actively resisted conscription for the duration of the war.

Later, following the decisive Union victory at Missionary Ridge in the winter of 1863, the writing was on the wall and the Confederate forces experienced a veritable hemorrhage of desertions, again, especially from small-holding, up-country recruits who had no direct interest in the preservation of slavery, especially when it seemed likely to cost them their own lives. Their attitude was summed up in a popular slogan of the time in the Confederacy that the war was “A rich man’s war and a poor man’s fight,” a slogan only reinforced by the fact that rich planters with more than twenty slaves could keep one son at home, presumably to ensure labor discipline. All told, something like a quarter of a million eligible draft-age men deserted or evaded service altogether. To this blow, absorbed by a Confederacy already overmatched in manpower, must be added the substantial numbers of slaves, especially from the border states, who ran to the Union lines, many of whom then enlisted in the Union forces. Last, it seems that the remaining slave population, cheered by Union advances and reluctant to exhaust themselves to increase war production, dragged their feet whenever possible and frequently absconded as well to refuges such as the Great Dismal Swamp, along the Virginia–North Carolina border, where they could not be easily tracked. Thousands upon thousands of acts of desertion, shirking, and absconding, intended to be unobtrusive and to escape detection, amplified the manpower and industrial advantage of the Union forces and may well have been decisive in the Confederacy’s ultimate defeat.

Napoleon’s wars of conquest were ultimately crippled by comparable waves of disobedience. While it is claimed that Napoleon’s invading soldiers brought the French Revolution to the rest of Europe in their knapsacks, it is no exaggeration to assert that the limits of these conquests were sharply etched by the disobedience of the men expected to shoulder those knapsacks. From 1794 to 1796 under the Republic, and then again from 1812 under the Napoleonic empire, the difficulty of scouring the countryside for conscripts was crippling. Families, villages, local officials, and whole cantons conspired to welcome back recruits who had fled and to conceal those who had evaded conscription altogether, some by severing one or more fingers of their right hand. The rates of draft evasion and desertion were something of a referendum on the popularity of the regime and, given the strategic importance of these “voters-with-their-feet” to the needs of Napoleon’s quartermasters, the referendum was conclusive. While the citizens of the First Republic and of Napoleon’s empire may have warmly embraced the promise of universal citizenship, they were less enamored of its logical twin, universal conscription.

Stepping back a moment, it’s worth noticing something particular about these acts: they are virtually all anonymous; they do not shout their name. In fact, their unobtrusiveness contributes to their effectiveness. Desertion is quite different from an open mutiny that directly challenges military commanders. It makes no public claims, it issues no manifestos; it is exit rather than voice. And yet, once the extent of desertion becomes known, it constrains the ambitions of commanders, who know they may not be able to count on their conscripts. During the unpopular U.S. war in Vietnam, the reported “fragging” (throwing of a fragmentation grenade) of those officers who repeatedly exposed their men to deadly patrols was a far more dramatic and violent but nevertheless still anonymous act, meant to lessen the deadly risks of war for conscripts. One can well imagine how reports of fragging, whether true or not, might make officers hesitate to volunteer themselves and their men for dangerous missions. To my knowledge, no study has ever looked into the actual incidence of fragging, let alone the effects it may have had on the conduct and termination of the war. The complicity of silence is, in this case as well, reciprocal.

Quiet, anonymous, and often complicitous, lawbreaking and disobedience may well be the historically preferred mode of political action for peasant and subaltern classes, for whom open defiance is too dangerous. For the two centuries from roughly 1650 to 1850, poaching (of wood, game, fish, kindling, fodder) from Crown or private lands was the most popular crime in England. By “popular” I mean both the most frequent and the most heartily approved of by commoners. Since the rural population had never accepted the claim of the Crown or the nobility to “the free gifts of nature” in forests, streams, and open lands (heath, moor, open pasture), they violated those property rights repeatedly and en masse, often enough to make the elite claim to property rights in many areas a dead letter. And yet, this vast conflict over property rights was conducted surreptitiously from below with virtually no public declaration of war. It is as if villagers had managed, de facto, defiantly to exercise their presumed right to such lands without ever making a formal claim. It was often remarked that the local complicity was such that gamekeepers could rarely find any villager who would serve as state’s witness.

In the historical struggle over property rights, the antagonists on either side of the barricades have used the weapons that most suited them. Elites, controlling the lawmaking machinery of the state, have deployed bills of enclosure, paper titles, and freehold tenure, not to mention the police, gamekeepers, forest guards, the courts, and the gibbet to establish and defend their property rights. Peasants and subaltern groups, having no access to such heavy weaponry, have instead relied on techniques such as poaching, pilfering, and squatting to contest those claims and assert their own. Unobtrusive and anonymous, like desertion, these “weapons of the weak” stand in sharp contrast to open public challenges that aim at the same objective. Thus, desertion is a lower-risk alternative to mutiny, squatting a lower-risk alternative to a land invasion, poaching a lower-risk alternative to the open assertion of rights to timber, game, or fish. For most of the world’s population today, and most assuredly for subaltern classes historically, such techniques have represented the only quotidian form of politics available. When they have failed, they have given way to more desperate, open conflicts such as riots, rebellions, and insurgency. These bids for power irrupt suddenly onto the official record, leaving traces in the archives beloved of historians and sociologists who, having documents to batten on, assign them a pride of place all out of proportion to the role they would occupy in a more comprehensive account of class struggle. Quiet, unassuming, quotidian insubordination, because it usually flies below the archival radar, waves no banners, has no officeholders, writes no manifestos, and has no permanent organization, escapes notice. And that’s just what the practitioners of these forms of subaltern politics have in mind: to escape notice. You could say that, historically, the goal of peasants and subaltern classes has been to stay out of the archives. When they do make an appearance, you can be pretty sure that something has gone terribly wrong.

If we were to look at the great bandwidth of subaltern politics all the way from small acts of anonymous defiance to massive popular rebellions, we would find that outbreaks of riskier open confrontation are normally preceded by an increase in the tempo of anonymous threats and acts of violence: threatening letters, arson and threats of arson, cattle maiming, sabotage and nighttime machine breaking, and so on. Local elites and officials historically knew these as the likely precursors of open rebellion; and they were intended to be read as such by those who engaged in them. Both the frequency of insubordination and its “threat level” (pace the Office of Homeland Security) were understood by contemporary elites as early warning signs of desperation and political unrest. One of the first op-eds of the young Karl Marx noted in great detail the correlation between, on the one hand, unemployment and declining wages among factory workers in the Rhineland, and on the other, the frequency of prosecution for the theft of firewood from private lands.

The sort of lawbreaking going on here is, I think, a special subspecies of collective action. It is not often recognized as such, in large part because it makes no open claims and because it is almost always self-serving at the same time. Who is to say whether the poaching hunter is more interested in a warm fire and rabbit stew than in contesting the claim of the aristocracy to the wood and the game he has just taken? It is most certainly not in his interest to help the historian with a public account of his motives. The success of his claim to wood and game lies in keeping his acts and motives shrouded. And yet, the long-run success of this lawbreaking depends on the complicity of his friends and neighbors who may believe in his and their right to forest products and may themselves poach and, in any case, will not bear witness against him or turn him in to the authorities.

One need not have an actual conspiracy to achieve the practical effects of a conspiracy. More regimes have been brought, piecemeal, to their knees by what was once called “Irish democracy,” the silent, dogged resistance, withdrawal, and truculence of millions of ordinary people, than by revolutionary vanguards or rioting mobs.

Fragment 3: More on Insubordination

To see how tacit coordination and lawbreaking can mimic the effects of collective action without its inconveniences and dangers, we might consider the enforcement of speed limits. Let’s imagine that the speed limit for cars is 55 miles per hour. Chances are that the traffic police will not be much inclined to prosecute drivers going 56, 57, 58 … even 60 mph, even though it is technically a violation. This “ceded space of disobedience” is, as it were, seized and becomes occupied territory, and soon much of the traffic is moving along at roughly 60 mph. What about 61, 62, 63 mph? Drivers going just a mile or two above the de facto limit are, they reason, fairly safe. Soon the speeds from, say, 60 to 65 mph bid fair to become conquered territory as well. All of the drivers, then, going about 65 mph come absolutely to depend for their relative immunity from prosecution on being surrounded by a veritable capsule of cars traveling at roughly the same speed. A contagion effect, born of observation and tacit coordination, is at work here, although there is no “Central Committee of Drivers” meeting and plotting massive acts of civil disobedience. At some point, of course, the traffic police do intervene to issue fines and make arrests, and the pattern of their intervention sets terms of calculation that drivers must now consider when deciding how fast to drive. The pressure at the upper end of the tolerated speed, however, is always being tested by drivers in a hurry, and if, for whatever reason, enforcement lapses, the tolerated speed will expand to fill the gap. As with any analogy, this one must not be pushed too far. Exceeding the speed limit is largely a matter of convenience, not a matter of rights and grievances, and the dangers to speeders from the police are comparatively trivial. (If, on the contrary, we had a 55-mph speed limit and, say, only three traffic police for the whole nation, who summarily executed five or six speeders and strung them up along the interstate highways, the dynamic I have described would screech to a halt!)

I’ve noticed a similar pattern in the way that what begin as “shortcuts” in walking paths often end up becoming paved walkways. Imagine a pattern of daily walking trajectories that, were they confined to paved sidewalks, would oblige people to negotiate the two sides of a right triangle rather than striking out along the (unpaved) hypotenuse. Chances are, a few would venture the shortcut and, if not thwarted, establish a route that others would be tempted to take merely to save time. If the shortcut is heavily trafficked and the groundskeepers relatively tolerant, the shortcut may well, over time, come to be paved. Tacit coordination again. Of course, virtually all of the lanes in older cities that grew from smaller settlements were created in precisely this way; they were the formalization of daily pedestrian and cart tracks, from the well to the market, from the church or school to the artisan quarter—a good example of the principle attributed to Chuang Tzu, “We make the path by walking.”

The movement from practice to custom to rights inscribed in law is an accepted pattern in both common and positive law. In the Anglo-American tradition, it is represented by the law of adverse possession, whereby a pattern of trespass or seizure of property, repeated continuously for a certain number of years, can be used to claim a right, which is then legally protected. In France, a practice of trespass that could be shown to be of long standing would qualify as a custom and, once proved, would establish a right in law.

Under authoritarian rule it seems patently obvious that subjects who have no elected representatives to champion their cause and who are denied the usual means of public protest (demonstrations, strikes, organized social movements, dissident media) would have no other recourse than foot-dragging, sabotage, poaching, theft, and, ultimately, revolt. Surely the institutions of representative democracy and the freedoms of expression and assembly afforded modern citizens make such forms of dissent obsolete. After all, the core purpose of representative democracy is precisely to allow democratic majorities to realize their claims, however ambitious, in a thoroughly institutionalized fashion.

It is a cruel irony that this great promise of democracy is rarely realized in practice. Most of the great political reforms of the nineteenth and twentieth centuries have been accompanied by massive episodes of civil disobedience, riot, lawbreaking, the disruption of public order, and, at the limit, civil war. Such tumult not only accompanied dramatic political changes but was often absolutely instrumental in bringing them about. Representative institutions and elections by themselves, sadly, seem rarely to bring about major changes in the absence of the force majeure afforded by, say, an economic depression or international war. Owing to the concentration of property and wealth in liberal democracies and the privileged access to media, culture, and political influence these positional advantages afford the richest stratum, it is little wonder that, as Gramsci noted, giving the working class the vote did not translate into radical political change.[7] Ordinary parliamentary politics is noted more for its immobility than for facilitating major reforms.

We are obliged, if this assessment is broadly true, to confront the paradox of the contribution of lawbreaking and disruption to democratic political change. Taking the twentieth-century United States as a case in point, we can identify two major policy reform periods, the Great Depression of the 1930s and the civil rights movement of the 1960s. What is most striking about each, from this perspective, is the vital role massive disruption and threats to public order played in the process of reform.

The great policy shifts represented by the institution of unemployment compensation, massive public works projects, social security aid, and the Agricultural Adjustment Act were, to be sure, abetted by the emergency of the world depression. But the way in which the economic emergency made its political weight felt was not through statistics on income and unemployment but through rampant strikes, looting, rent boycotts, quasi-violent sieges of relief offices, and riots that put what my mother would have called “the fear of God” in business and political elites. They were thoroughly alarmed at what seemed at the time to be potentially revolutionary ferment. The ferment in question was, in the first instance, not institutionalized. That is to say, it was not initially shaped by political parties, trade unions, or recognizable social movements. It represented no coherent policy agenda. Instead it was genuinely unstructured, chaotic, and full of menace to the established order. For this very reason, there was no one to bargain with, no one to credibly offer peace in return for policy changes. The menace was directly proportional to its lack of institutionalization. One could bargain with a trade union or a progressive reform movement, institutions that were geared into the institutional machinery. A strike was one thing, a wildcat strike was another: even the union bosses couldn’t call off a wildcat strike. A demonstration, even a massive one, with leaders was one thing, a rioting mob was another. There were no coherent demands, no one to talk to.

The ultimate source of the massive spontaneous militancy and disruption that threatened public order lay in the radical increase in unemployment and the collapse of wage rates for those lucky enough still to be employed. The normal conditions that sustained routine politics suddenly evaporated. Neither the routines of governance nor the routines of institutionalized opposition and representation made much sense. At the individual level, the deroutinization took the form of vagrancy, crime, and vandalism. Collectively, it took the form of spontaneous defiance in riots, factory occupations, violent strikes, and tumultuous demonstrations. What made the rush of reforms possible were the social forces unleashed by the Depression, which seemed beyond the ability of political elites, property owners, and, it should be noted, trade unions and left-wing parties to master. The hand of the elites was forced.

An astute colleague of mine once observed that liberal democracies in the West were generally run for the benefit of the top, say, 20 percent of the wealth and income distribution. The trick, he added, to keeping this scheme running smoothly has been to convince, especially at election time, the next 30 to 35 percent of the income distribution to fear the poorest half more than they envy the richest 20 percent. The relative success of this scheme can be judged by the persistence of income inequality—and its recent sharpening—over more than a half century. The times when this scheme comes undone are in crisis situations when popular anger overflows its normal channels and threatens the very parameters within which routine politics operates. The brutal fact of routine, institutionalized liberal democratic politics is that the interests of the poor are largely ignored until and unless a sudden and dire crisis catapults the poor into the streets. As Martin Luther King, Jr., noted, “a riot is the language of the unheard.” Large-scale disruption, riot, and spontaneous defiance have always been the most potent political recourse of the poor. Such activity is not without structure. It is structured by informal, self-organized, and transient networks of neighborhood, work, and family that lie outside the formal institutions of politics. This is structure all right, just not the kind amenable to institutionalized politics.

Perhaps the greatest shortcoming of liberal democracies is that their institutions have historically failed to protect the vital economic and security interests of their less advantaged citizens. The fact that democratic progress and renewal appear instead to depend vitally on major episodes of extra-institutional disorder stands in massive contradiction to the promise of democracy as the institutionalization of peaceful change. And it is just as surely a failure of democratic political theory that it has not come to grips with the central role of crisis and institutional failure in those major episodes of social and political reform when the political system is relegitimated.

It would be wrong and, in fact, dangerous to claim that such large-scale provocations always or even generally lead to major structural reform. They may instead lead to growing repression, the restriction of civil rights, and, in extreme cases, the overthrow of representative democracy. Nevertheless, it is undeniable that most episodes of major reform have not been initiated without major disorders and the rush of elites to contain and normalize them. One may legitimately prefer the more “decorous” forms of rallies and marches that are committed to nonviolence and seek the moral high ground by appealing to law and democratic rights. Such preferences aside, structural reform has rarely been initiated by decorous and peaceful claims.

The job of trade unions, parties, and even radical social movements is precisely to institutionalize unruly protest and anger. Their function is, one might say, to try to translate anger, frustration, and pain into a coherent political program that can be the basis of policy making and legislation. They are the transmission belt between an unruly public and rule-making elites. The implicit assumption is that if they do their jobs well, not only will they be able to fashion political demands that are, in principle, digestible by legislative institutions, they will, in the process, discipline and regain control of the tumultuous crowds by plausibly representing their interests, or most of them, to the policy makers. Those policy makers negotiate with such “institutions of translation” on the premise that they command the allegiance of and hence can control the constituencies they purport to represent. In this respect, it is no exaggeration to say that organized interests of this kind are parasitic on the spontaneous defiance of those whose interests they presume to represent. It is that defiance that is, at such moments, the source of what influence they have as governing elites strive to contain and channel insurgent masses back into the run of normal politics.

Another paradox: at such moments, organized progressive interests achieve a level of visibility and influence on the basis of defiance that they neither incited nor controlled, and they achieve that influence on the presumption they will then be able to discipline enough of that insurgent mass to reclaim it for politics as usual. If they are successful, of course, the paradox deepens, since as the disruption on which they rose to influence subsides, so does their capacity to affect policy.

The civil rights movement in the 1960s and the speed with which both federal voting registrars were imposed on the segregated South and the Voting Rights Act was passed largely fit the same mold. The widespread voter-registration drives, Freedom Rides, and sit-ins were the product of a great many centers of initiative and imitation. Efforts to coordinate, let alone organize, this bevy of defiance eluded many of the ad hoc bodies established for this purpose, such as the Student Nonviolent Coordinating Committee, to say nothing of the older, mainstream civil rights organizations such as the National Association for the Advancement of Colored People, the Congress of Racial Equality, and the Southern Christian Leadership Conference. The enthusiasm, spontaneity, and creativity of the cascading social movement ran far ahead of the organizations wishing to represent, coordinate, and channel it.

Again, it was the widespread disruption, caused in large part by the violent reaction of segregationist vigilantes and public authorities, that created a crisis of public order throughout much of the South. Legislation that had languished for years was suddenly rushed through Congress as John and Robert Kennedy strove to contain the growing riots and demonstrations, their resolve stiffened by the Cold War propaganda battle, in which the violence in the South could plausibly be portrayed as the mark of a racist state. Massive disorder and violence achieved, in short order, what decades of peaceful organizing and lobbying had failed to attain.

I began this essay with the fairly banal example of crossing against the traffic lights in Neubrandenburg. The purpose was not to urge lawbreaking for its own sake, still less for the petty reason of saving a few minutes. My purpose was rather to illustrate how ingrained habits of automatic obedience could lead to a situation that, on reflection, virtually everyone would agree was absurd. Virtually all the great emancipatory movements of the past three centuries have initially confronted a legal order, not to mention police power, arrayed against them. They would scarcely have prevailed had not a handful of brave souls been willing to breach those laws and customs (e.g., through sit-ins, demonstrations, and mass violations of pass laws). Their disruptive actions, fueled by indignation, frustration, and rage, made it abundantly clear that their claims could not be met within the existing institutional and legal parameters. Thus, immanent in their willingness to break the law was not so much a desire to sow chaos as a compulsion to instate a more just legal order. To the extent that our current rule of law is more capacious and emancipatory than its predecessors were, we owe much of that gain to lawbreakers.

Fragment 4: Advertisement: “Leader looking for followers, willing to follow your lead”

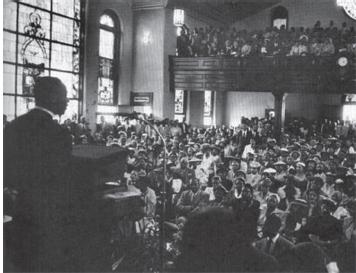

Riots and disruption are not the only way the unheard make their voices felt. There are certain conditions in which elites and leaders are especially attentive to what the unheard have to say, to their likes and dislikes. Consider the case of charisma. It is common to speak of someone possessing charisma in the same way he could be said to have a hundred dollars in his pocket or a BMW in his garage. In fact, of course, charisma is a relationship; it depends absolutely on an audience and on culture. A charismatic performance in Spain or Afghanistan might not be even remotely charismatic in Laos or Tibet. It depends, in other words, on a response, a resonance with those witnessing the performance. And in certain circumstances elites work very hard to elicit that response, to find the right note, to harmonize their message with the wishes and tastes of their listeners and spectators. At rare moments, one can watch this happen in real time. Martin Luther King, Jr., for certain audiences perhaps the most charismatic American political figure of the twentieth century, provides one such moment. Thanks to Taylor Branch’s sensitive and detailed biography of King and the movement, we can follow King’s search for the right note as it unfolded within the call-and-response tradition of the African American church. I excerpt, at length, Branch’s account of the speech King gave at the Holt Street Baptist Church in December 1955, after the conviction of Rosa Parks and on the eve of the Montgomery bus boycott:

“We are here this evening—for serious business,” he said, in even pulses, rising and then falling in pitch. When he paused, only one or two “yes” responses came up from the crowd, and they were quiet ones. It was a throng of shouters he could see, but they were waiting to see where he would take them. [He speaks of Rosa Parks as a fine citizen.]

“And I think I speak with—with legal authority—not that I have any legal authority … that the law has never been totally clarified.” This sentence marked King as a speaker who took care with distinctions, but it took the crowd nowhere. “Nobody can doubt the height of her character, no one can doubt the depth of her Christian commitment.”

“That’s right,” a soft chorus answered.

“And just because she refused to get up, she was arrested,” King repeated. The crowd was stirring now, following King at the speed of a medium walk.

He paused slightly longer.

“And you know, my friends, there comes a time,” he cried, “when people get tired of being trampled over by the iron feet of oppression.”

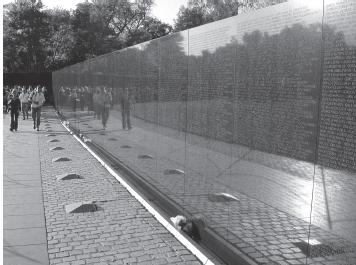

A flock of “Yeses” was coming back at him when suddenly the individual responses dissolved into a rising cheer and applause exploded beneath that cheer—all within the space of a second. The startling noise rolled on and on, like a wave that refused to break, and just when it seemed that the roar must finally weaken, a wall of sound came in from the enormous crowd outdoors to push the volume still higher. Thunder seemed to be added to the lower register—the sound of feet stomping on the wooden floor—until the loudness became something that was not so much heard as sensed by vibrations in the lungs. The giant cloud of noise shook the building and refused to go away. One sentence had set it loose somehow, pushing the call-and-response of the Negro church past the din of a political rally and on to something else that King had never known before. There was a rabbit of enormous proportions in those bushes. As the noise finally fell back, King’s voice rose above it to fire again. “There comes a time, my friends, when people get tired of being thrown across the abyss of humiliation, when they experience the bleakness of nagging despair,” he declared. “There comes a time when people get tired of getting pushed out of the glittering sunlight of life’s July, and left standing amidst the piercing chill of an Alpine November. There—” King was making a new run, but the crowd drowned him out. No one could tell whether the roar came in response to the nerve he had touched or simply out of pride in the speaker from whose tongue such rhetoric rolled so easily. “We are here—we are here because we are tired now,” King repeated [fig. 1.2].[8][9]

Figure 1.2. Dr. Martin Luther King, Jr., delivering his last sermon, Memphis, Tennessee, April 3, 1968. Photograph from blackpast.org

The pattern Branch so vividly depicts here is repeated in the rest of this particular speech and in most of King’s speeches. Charisma is a kind of perfect pitch. King develops a number of themes and a repertoire of metaphors for expressing them. When he senses a powerful response he repeats the theme in a slightly different way to sustain the enthusiasm and elaborate it. As impressive as his rhetorical creativity is, it is utterly dependent on finding the right pitch that will resonate with the deepest emotions and desires of his listeners. If we take a long view of King as a spokesman for the black Christian community, the civil rights movement, and nonviolent resistance (each a somewhat different audience), we can see how, over time, the seemingly passive listeners to his soaring oratory helped write his speeches for him. They, by their responses, selected the themes that made the vital emotional connection, themes that King would amplify and elaborate in his unique way. The themes that resonated grew; those that elicited little response were dropped from King’s repertoire. Like all charismatic acts, it was in two-part harmony.

The key condition for charisma is listening very carefully and responding. The condition for listening very carefully is a certain dependence on the audience, a certain relationship of power. One of the characteristics of great power is not having to listen. Those at the bottom of the heap are, in general, better listeners than those at the top. The daily quality of the lifeworld of a slave, a serf, a sharecropper, a worker, a domestic depends greatly on an accurate reading of the mood and wishes of the powerful, whereas slave owners, landlords, and bosses can often ignore the wishes of their subordinates. The structural conditions that encourage such attentiveness are therefore the key to this relationship. For King, the attentiveness was built into being asked to lead the Montgomery bus boycott and being dependent on the enthusiastic participation of the black community.

To see how such counterintuitive “speechwriting” works in other contexts, let’s imagine a bard in the medieval marketplace who sings and plays music for a living. Let’s assume also, for purposes of illustration, that the bard in question is a “downmarket” performer—that he plays in the poor quarters of the town and is dependent on a copper or two from many of his listeners for his daily bread. Finally, let’s further imagine that the bard has a repertoire of a thousand songs and is new to the town.